Vision for autonomous systems.

My research investigates how to design computer vision algorithms and systems that can automatically detect unknown or novel objects in a way that is both fast and robust. The main theme of my work is to enable machines to learn about the things around them (e.g., objects, places, people) holistically, through observation and interaction, in a manner similar to how humans learn.

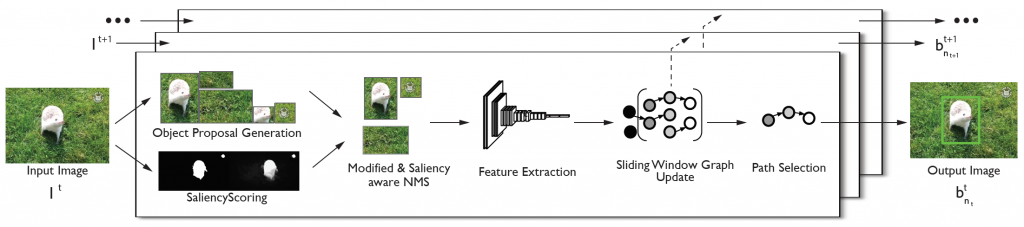

We developed Unsupervised Foraging of Objects (UFO), an unsupervised, salient object discovery method designed for standard cameras. UFO leverages a parallel discover-prediction paradigm, permitting it to discover arbitrary, salient objects frame-by-frame (i.e., online), which can help robots engage in scalable object learning. This work was published at R:SS Pioneers 2019, RA-L 2020, and ICRA 2020.

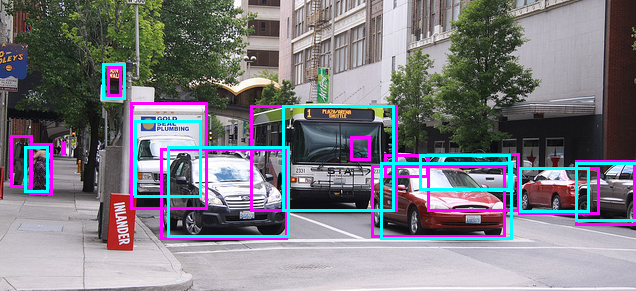

We evaluated state-of-the-art object proposal algorithms on naturalistic datasets from the robotics community. The results provide insight into specific weaknesses of these algorithms and can be used to gauge how suitable they are for different robotics applications. This work was published at IROS 2019.

My lab collaborated with the Cognitive Robotics Lab (led by Henrik Christensen) to compete at RoboCup 2017 in Nagoya, Japan. Our goal was to develop the AI for the Toyota Human Support Robot (HSR) to autonomously complete a variety of household tasks. One challenge in particular involved sorting objects, some of which were not known beforehand. This ultimately inspired me to pursue my dissertation topic!

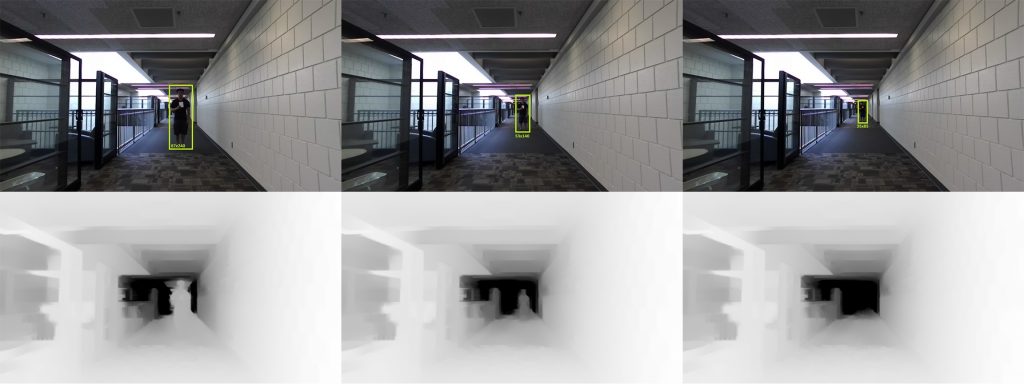

We developed Salient Depth Partitioning (SDP), a depth-based region cropping algorithm devised to be easily adapted to existing detection algorithms. SDP is designed to give robots a better sense of visual attention and to reduce the processing time of pedestrian detectors. This work was published at IROS 2017.